I, (not) Robot, or am I?

Passing (or failing?) a reverse Turing test

Maybe instead of being simultaneously alive and dead like Schrödinger’s cat I am simultaneously human and robot?

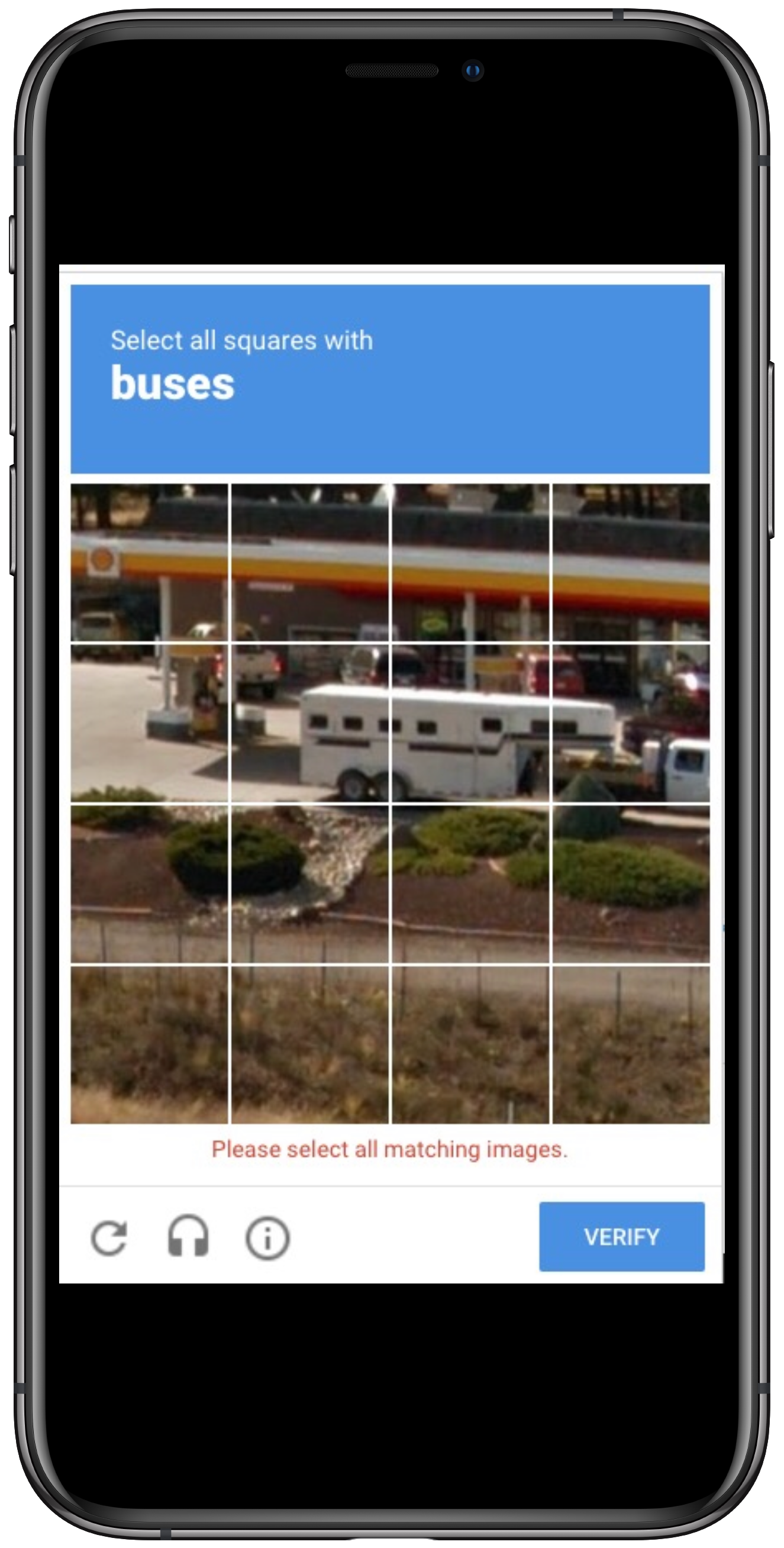

The other day I wasn’t human until I agreed with a robot that a horse trailer was a bus. A machine learning algorithm insisted on this mislabeling before I could access my AT&T wireless account, but it could have been any number of other services.

To read more, select all squares with buses below.

Except there are no buses in the image. Fail. There is clearly a horse trailer but there is no bus. Tapping Verify with no selections did not work. The machine learning algorithm insisted on selection of the three squares containing the horse trailer before “verifying” that I was human.

What’s a human (robot) to do?

By now it is likely that everyone has run into a CAPTCHA on the Internet, but I bet most don’t know that CAPTCHA is an acronym for “Completely Automated Public Turing test to tell Computers and Humans Apart”.

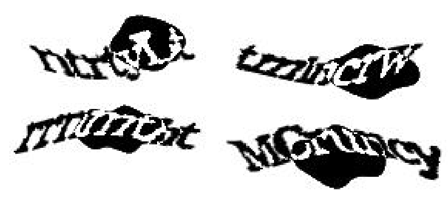

According to Wikipedia, the most common form requires the user to type the letters shown in a distorted image. I despise this form. The images have become more distorted over the years because artificial intelligence technology can now solve even the most difficult variants of distorted text with 99.8% accuracy, which, based on my own experience, is more accurate than any human.

Just look at those CAPTCHA images that were correctly solved by a machine learning algorithm. I’m not sure I can solve a single one.

A Turing test is conceived as having a human judge and a computer subject that attempts to appear human. The test is whether the computer (robot) can pass as a human and convince the judge that it is human. A reverse Turing test swaps the roles of human and computer: the computer is the judge. Google’s modern reCAPTCHA system, known as the “No CAPTCHA reCAPTCHA”, simply asks whether you are human by presenting the statement “I’m not a robot” and expecting the user to check the check-box to indicate that the user is human (user != robot).

When Google rolled this new system out there was much hype that a significant number of users would be able to securely and easily verify they’re human without actually having to solve a CAPTCHA. With just a single click they could confirm they are not a robot. Except… Google also developed “Advanced Risk Analysis” on the back end for reCAPTCHA, which considers a user’s entire engagement with the CAPTCHA (before, during, and after) to determine whether the user is a human.

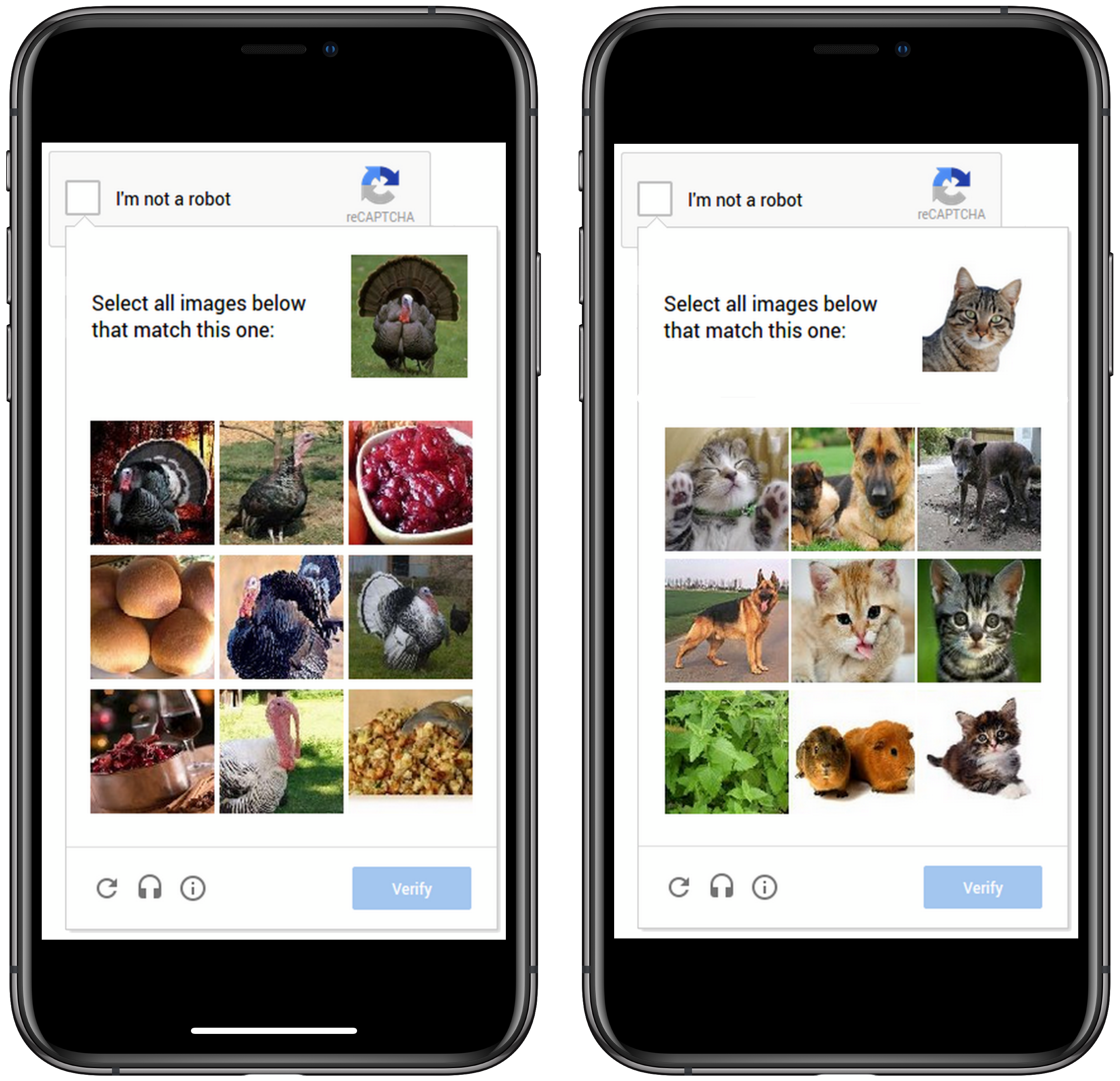

It can be no surprise that we are now at the point where, due to advanced risk analysis and the ever-increasing capabilities of bots, humans (users) are often presented with challenges that are supposedly easier for humans to solve, particularly on mobile devices. Increasingly these reCAPTCHA challenges are based on a classic computer vision problem: image labeling. For this version of the challenge the user is asked to select all of the images that correspond with the clue. The theory is that it is much easier to tap photos of traffic lights or crosswalks or cats or turkeys than to tediously type a line of distorted text on your phone.
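For the curious, here is a toy sketch of how a select-the-squares check might decide who is human. It is not Google's implementation; the `Tile` class, the `machine_label` field, and the `verify` function are all hypothetical names made up for illustration. The assumption is simply that the judge compares your taps against its own labels, which is exactly why a mislabeled horse trailer becomes a bus you are required to select.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tile:
    index: int          # position in the grid, 0-based
    machine_label: str  # what the (hypothetical) classifier thinks the tile shows


def expected_selection(tiles, clue):
    """Return the set of tile indices the judge expects for the clue."""
    return {t.index for t in tiles if t.machine_label == clue}


def verify(tiles, clue, selected):
    """Pass only if the user's selection matches the machine's labels exactly."""
    return set(selected) == expected_selection(tiles, clue)


# A 3x3 grid in which the classifier has mislabeled a horse trailer as a bus.
grid = [Tile(i, "road") for i in range(9)]
for i in (3, 4, 5):
    grid[i] = Tile(i, "bus")  # really a horse trailer, but the judge disagrees

print(verify(grid, "bus", []))         # False: "there are no buses" is rejected
print(verify(grid, "bus", [3, 4, 5]))  # True: agree with the robot, become human
```

In this toy model the truth of what is in the picture never enters the check; only agreement with the machine's labels does.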

Go ahead. You can do it. Select all the buses.